Model Exports

Exporting models for deployment to personal or edge devices

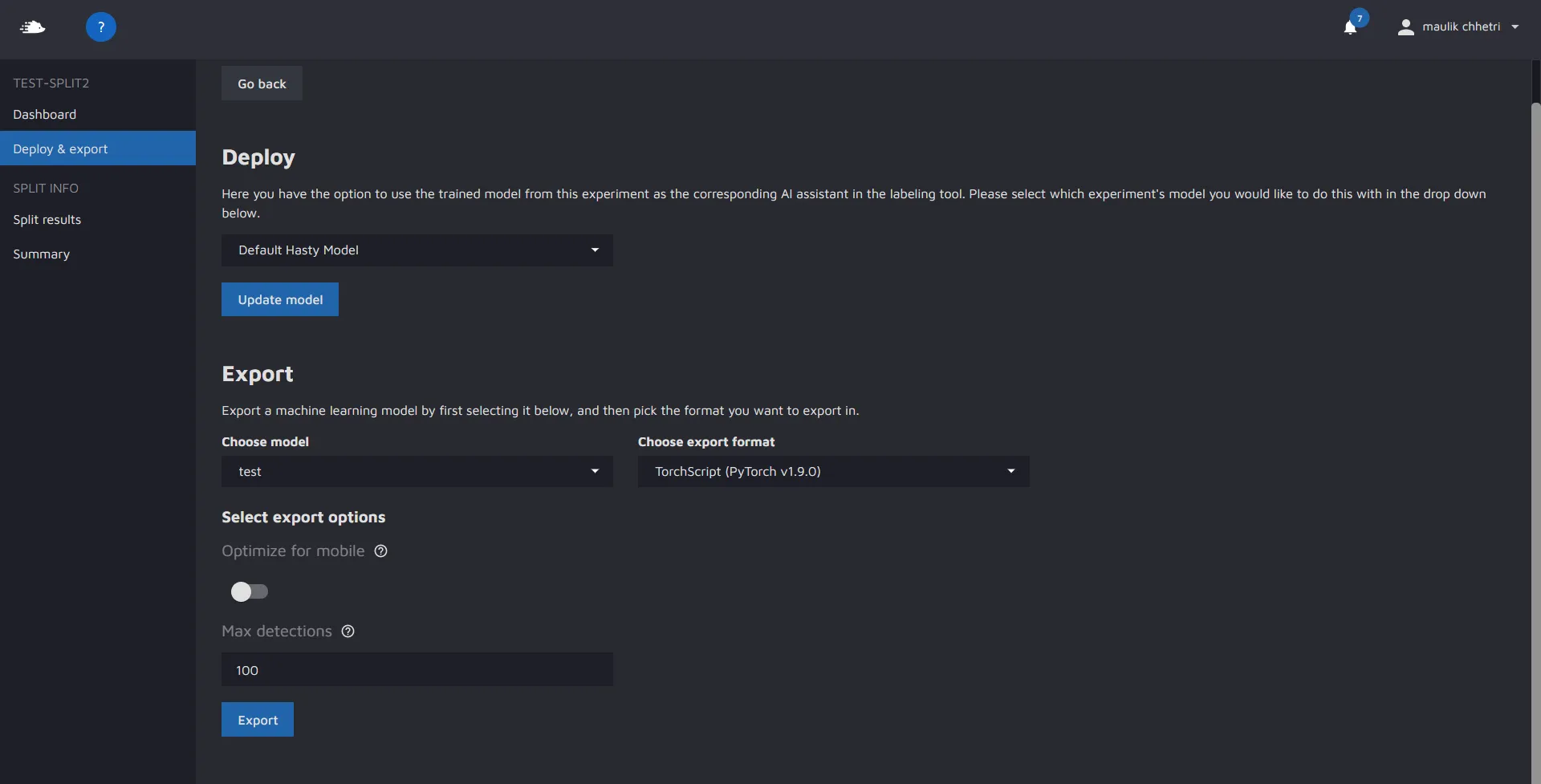

In Model Playground, you can export the best model from an experiment. To do so, navigate to the "deploy and export" page inside your split, as shown in the screenshot above.

In the export section, choose the experiment you want to export the model from and the format to export it in.

Additional export options:

- Optimize for mobile - optimizes the model for execution on mobile devices. Hasty does this with PyTorch's mobile optimizer utility, which applies a series of optimizations to a TorchScript module in evaluation mode. For details on the exact optimizations, refer to the official PyTorch documentation.

- Max Detections - sets the maximum number of detections the model returns per image during inference.
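To illustrate what a max-detections limit means, the sketch below keeps only the highest-scoring predictions for one image. The `Detection` record and the `limit_detections` helper are hypothetical and are not part of the exported model or of Hasty's API; the sketch only shows the effect of the setting, not how Hasty implements it.

```python
from heapq import nlargest
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical detection record: class id, confidence score, and box."""
    class_id: int
    score: float
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def limit_detections(detections: List[Detection], max_detections: int) -> List[Detection]:
    """Keep at most `max_detections` detections, ordered by descending score."""
    if max_detections < 0:
        raise ValueError("max_detections must be non-negative")
    return nlargest(max_detections, detections, key=lambda d: d.score)


if __name__ == "__main__":
    preds = [
        Detection(0, 0.91, (10, 10, 50, 50)),
        Detection(1, 0.35, (60, 20, 90, 80)),
        Detection(0, 0.78, (15, 12, 48, 55)),
    ]
    # With a limit of 2, the lowest-scoring detection (0.35) is dropped.
    for det in limit_detections(preds, max_detections=2):
        print(det.class_id, det.score)
```

A lower limit trades recall for smaller, faster-to-process outputs, which can matter on constrained edge devices.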

Currently, all model families support TorchScript export. Models in this format can run on CPU and GPU, and, depending on the model you choose, some can also run on Android or iOS devices. To read up on TorchScript, we recommend the official PyTorch documentation:

- TorchScript Documentation

- Loading TorchScript in C++

- TorchScript model on iOS

- TorchScript model on Android

- PyTorch Mobile

Exported model files:

Once you export the model and download the export file, unzip it. This leaves you with a folder that contains the following files:

- 'model.pt' - A TorchScript-compatible model file.

- 'config.yaml' - A summary of your experiment settings - including the transforms you used.

- 'class_mapping.json' - A JSON file that maps the class integers predicted by the model to the class names that were present in your project.

- 'transforms.json' - A JSON file that contains the serialized transforms used for testing/validation.
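The unzip-and-inspect step described above can be sketched with the Python standard library. This is a minimal sketch under stated assumptions: the archive name and the helpers `extract_export` and `load_class_mapping` are hypothetical, the files may sit either at the archive root or inside one folder, and `class_mapping.json` is assumed to be a flat JSON object pairing class ids with names (in either direction). Check your actual file before relying on this.

```python
import json
import zipfile
from pathlib import Path
from typing import Dict

# File names as listed in the export documentation.
EXPECTED_FILES = ("model.pt", "config.yaml", "class_mapping.json", "transforms.json")


def extract_export(zip_path: str, dest_dir: str) -> Path:
    """Unzip an export archive and return the folder holding all expected files."""
    dest = Path(dest_dir)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)  # creates dest_dir if it does not exist
    # The files may be at the archive root or inside a folder,
    # so search the extracted tree for model.pt and check its siblings.
    for model_file in dest.rglob("model.pt"):
        folder = model_file.parent
        if all((folder / name).is_file() for name in EXPECTED_FILES):
            return folder
    raise FileNotFoundError(f"No folder in {zip_path} contains all of {EXPECTED_FILES}")


def load_class_mapping(folder: Path) -> Dict[int, str]:
    """Return {class_id: class_name}.

    ASSUMPTION: class_mapping.json is a flat JSON object such as
    {"0": "car"} (id -> name) or {"car": 0} (name -> id).
    """
    raw = json.loads((folder / "class_mapping.json").read_text(encoding="utf-8"))
    mapping: Dict[int, str] = {}
    for key, value in raw.items():
        if isinstance(value, int):
            mapping[value] = key  # name -> id layout
        else:
            mapping[int(key)] = str(value)  # id -> name layout (JSON keys are strings)
    return mapping
```

For example, `folder = extract_export("export.zip", "my_model")` followed by `names = load_class_mapping(folder)` lets you translate a predicted class integer with `names[class_id]`. The `model.pt` file in the same folder can be loaded with PyTorch's `torch.jit.load`; reading `config.yaml` requires a YAML parser, which is not part of the standard library.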

These four files provide all the information needed to use and deploy the model on edge devices. To help you get started, we provide sample inference scripts in Python on the following pages.