EfficientNet

EfficientNets are a family of neural networks whose baseline model was designed with Neural Architecture Search (NAS).

A neural architecture search that optimizes for both accuracy and FLOPS (floating-point operations) first produces the baseline model, called EfficientNet-B0.

Starting from this baseline, we apply compound scaling, which scales the network's depth, width, and input resolution together, to create the rest of the EfficientNet family, from EfficientNet-B1 to EfficientNet-B7.
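
As a rough illustration of compound scaling, the sketch below computes depth, width, and resolution multipliers from a single coefficient `phi`. The constants 1.2, 1.1, and 1.15 are the values reported in the EfficientNet paper; the function names are ours and purely illustrative, and the released B1 to B7 models may use rounded values that do not match this formula exactly.

```python
from __future__ import annotations

# Scaling constants reported in the EfficientNet paper for the B0 baseline.
# They are chosen so that ALPHA * BETA**2 * GAMMA**2 is roughly 2.
ALPHA = 1.2   # depth base
BETA = 1.1    # width base
GAMMA = 1.15  # resolution base


def compound_scale(phi: float) -> tuple[float, float, float]:
    """Return (depth, width, resolution) multipliers for coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    return depth, width, resolution


def flops_multiplier(phi: float) -> float:
    """Approximate FLOPS growth relative to the baseline.

    FLOPS scale linearly with depth and quadratically with width and
    resolution, so the total grows roughly as 2 ** phi.
    """
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2


if __name__ == "__main__":
    for phi in range(4):
        d, w, r = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution x{r:.2f}, FLOPS x{flops_multiplier(phi):.2f}")
```

With `phi = 0` all multipliers are 1, which corresponds to the baseline model; each increment of `phi` roughly doubles the compute budget.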

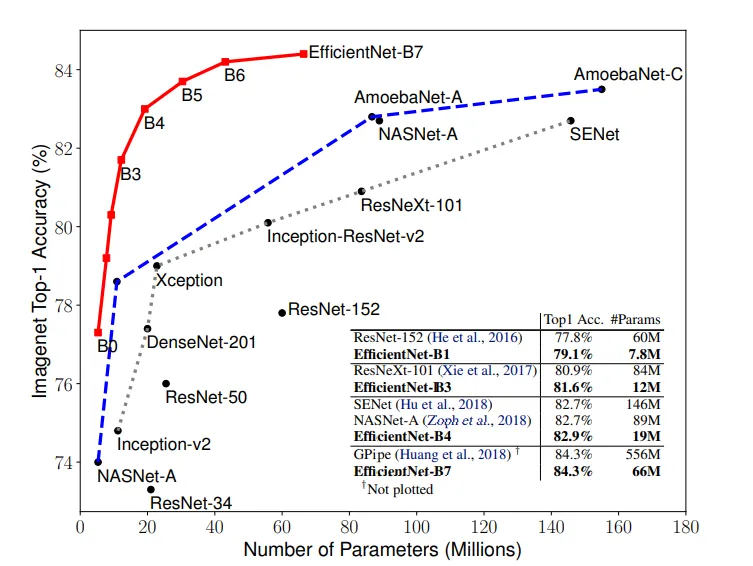

These networks achieve higher accuracy with fewer parameters than other state-of-the-art neural networks.

We can see that the number of parameters in the EfficientNet family is significantly lower than in other models.

Parameters

EfficientNet sub type

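Hasty lets you choose among the different EfficientNet variants.

Note that there is a trade-off between the number of parameters and accuracy going from EfficientNet-B1 to EfficientNet-B7: the larger variants are generally more accurate, but they have more parameters and cost more to train and run.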
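
To make the trade-off concrete, here is a minimal sketch that picks the largest variant fitting a parameter budget. The parameter counts are the approximate figures reported in the EfficientNet paper (in millions); the helper `largest_variant_within` is a hypothetical name for illustration and is not part of Hasty.

```python
from __future__ import annotations

# Approximate parameter counts (millions) as reported in the EfficientNet paper.
PARAMS_MILLIONS = {
    "B0": 5.3,
    "B1": 7.8,
    "B2": 9.2,
    "B3": 12.0,
    "B4": 19.0,
    "B5": 30.0,
    "B6": 43.0,
    "B7": 66.0,
}


def largest_variant_within(budget_millions: float) -> str | None:
    """Return the largest variant whose parameter count fits the budget.

    Returns None when even the smallest variant exceeds the budget.
    """
    fitting = [name for name, params in PARAMS_MILLIONS.items()
               if params <= budget_millions]
    if not fitting:
        return None
    return max(fitting, key=PARAMS_MILLIONS.get)


if __name__ == "__main__":
    print(largest_variant_within(20))
```

A larger variant is usually worth choosing only when the extra accuracy justifies the longer training and inference time.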

Weight

These are the weights used to initialize the model. Here, we use the weights of EfficientNet-B0 pretrained on the ImageNet dataset.