Weight Decay

The weight decay hyperparameter controls the trade-off between having a powerful model and overfitting the model.

Intuition

Generally speaking, we want to make sure that our model is complex enough to map the relationship between input and output. In neural networks, this effect can be achieved by choosing weights that are far away from each other.

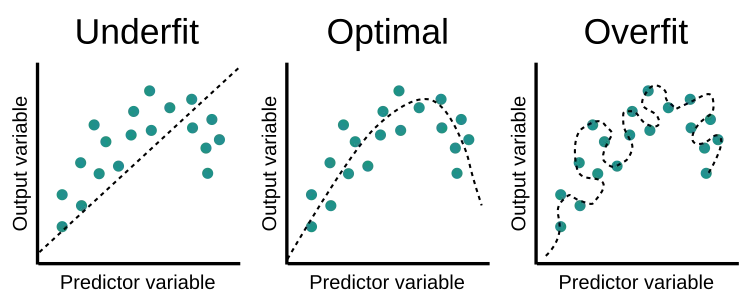

However, at the same time, the model shouldn't be too complex so that it doesn't overfit and doesn't generalize anymore.

Adding weight decay proposes a solution to overfitting your model by adding a term to the loss function for the distance between weights, typically in the form of an L2 normalization. This causes the optimizer to minimize not only the loss but also the distance between the weights. The loss function becomes:

loss = loss + weight decay parameter * L2 norm of the weights

But be careful; adding too much weight decay might cause your model to underfit.