Epoch

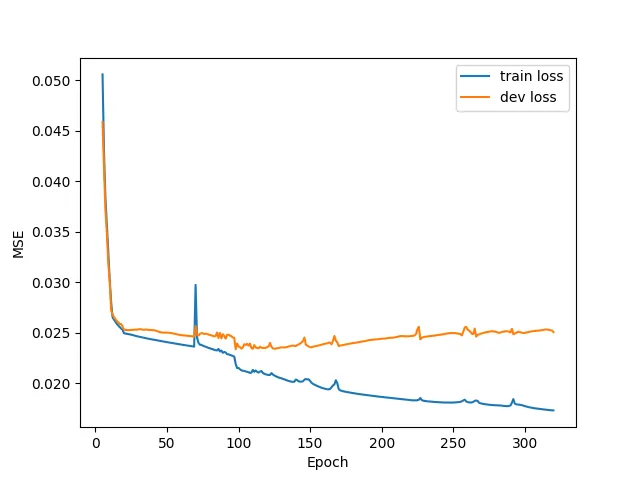

The number of epochs is a hyperparameter that defines how many times the learning algorithm works through the entire training dataset. Within one epoch, every training example is used exactly once.

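- Total number of training images = 5000

- Batch size = 64

- Iterations = 79 ∵(5000/64 = 78.125, i.e. 78 full batches of 64 plus one final batch of 8)

Hence, once you have done 79 iterations, you are done with one epoch and the network has seen the entire training data once. If the incomplete final batch is dropped instead, an epoch is 78 iterations and 8 images are skipped in that epoch.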
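Strictly, 5000/64 = 78.125: 78 full batches cover 4992 images, and the remaining 8 images form a smaller final batch. The minimal sketch below counts iterations per epoch both ways. The function names are hypothetical; the `drop_last` switch mirrors the parameter of the same name on PyTorch's `torch.utils.data.DataLoader`, which discards the incomplete final batch when set to `True`.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of mini-batches (iterations) in one pass over the dataset."""
    if drop_last:
        # Discard the final partial batch; the leftover samples are skipped this epoch.
        return num_samples // batch_size
    # Keep the final partial batch so every sample is seen exactly once.
    return math.ceil(num_samples / batch_size)


def batch_sizes(num_samples: int, batch_size: int) -> list[int]:
    """Sizes of the successive batches in one epoch (the last may be smaller)."""
    return [min(batch_size, num_samples - start)
            for start in range(0, num_samples, batch_size)]


if __name__ == "__main__":
    print(iterations_per_epoch(5000, 64))                  # 79
    print(iterations_per_epoch(5000, 64, drop_last=True))  # 78
    sizes = batch_sizes(5000, 64)
    print(sizes[-1], sum(sizes))                           # 8 5000
```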

If we choose X epochs, the network sees the entire dataset X times, and its weights are updated once per iteration (batch) throughout, so X epochs amount to X × (iterations per epoch) weight updates. Ideally, after X epochs, or even sooner, the optimizer should reach a minimum of the loss.
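To make the relationship between epochs, iterations and weight updates concrete, here is a minimal sketch of mini-batch gradient descent run for a fixed number of epochs. It assumes a toy 1-D linear model `y ≈ w*x + b` trained with mean squared error in place of a neural network; the function name `train`, the learning rate, and the synthetic data (y = 2x + 1) are all hypothetical.

```python
import random


def train(xs, ys, epochs, batch_size, lr=0.1, seed=0):
    """Mini-batch gradient descent for a toy 1-D linear model y ≈ w*x + b.

    Hypothetical stand-in for a neural network. Returns
    (w, b, number_of_weight_updates, full-dataset MSE after each epoch).
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    n = len(xs)
    order = list(range(n))
    updates = 0
    history = []
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a new order each epoch
        for start in range(0, n, batch_size):  # one iteration per batch
            batch = order[start:start + batch_size]
            # Gradients of the batch mean squared error w.r.t. w and b.
            errors = [w * xs[i] + b - ys[i] for i in batch]
            grad_w = sum(2 * e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = sum(2 * e for e in errors) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # the weights change once per iteration
        # Loss over the whole training set at the end of this epoch.
        history.append(sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n)
    return w, b, updates, history


if __name__ == "__main__":
    xs = [i / 100 for i in range(100)]  # 100 synthetic inputs in [0, 0.99]
    ys = [2 * x + 1 for x in xs]        # noiseless targets from y = 2x + 1
    w, b, updates, history = train(xs, ys, epochs=100, batch_size=16)
    print(updates)                       # 700 = 100 epochs x 7 iterations
    print(round(w, 3), round(b, 3))      # should approach 2 and 1
    print(history[0] > history[-1])      # True: the loss fell across epochs
```

With 100 samples and a batch size of 16, each epoch has 7 iterations (the last batch holds 4 samples), so 100 epochs give 700 weight updates.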

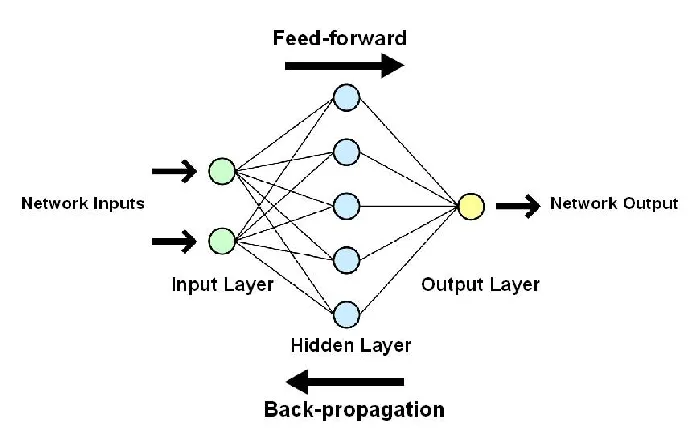

As the full dataset is passed through the same neural network multiple times (more epochs), the training loss generally decreases. However, as the number of epochs keeps growing, the weights are fitted ever more closely to the training data, including its noise, which may lead to overfitting: the loss on unseen (validation) data stops improving and starts to rise.
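One way to spot the point where extra epochs stop helping is to track the loss on a held-out validation set alongside the training loss. The sketch below assumes such per-epoch loss histories are available; the function name `diagnose` and the loss values are made up for illustration, not measurements.

```python
def diagnose(train_losses, val_losses):
    """Return (best_epoch, overfit_epochs), both 1-based.

    best_epoch: the epoch with the lowest validation loss.
    overfit_epochs: epochs where the training loss fell but the validation
    loss rose compared with the previous epoch, a common symptom of overfitting.
    """
    if len(train_losses) != len(val_losses) or not val_losses:
        raise ValueError("need equal-length, non-empty loss histories")
    best = min(range(len(val_losses)), key=val_losses.__getitem__) + 1
    overfit = [
        e + 1
        for e in range(1, len(val_losses))
        if train_losses[e] < train_losses[e - 1] and val_losses[e] > val_losses[e - 1]
    ]
    return best, overfit


if __name__ == "__main__":
    # Hypothetical per-epoch losses: training keeps improving, validation turns up.
    train = [0.90, 0.60, 0.45, 0.35, 0.28, 0.22, 0.18]
    val = [0.95, 0.70, 0.58, 0.55, 0.57, 0.61, 0.66]
    print(diagnose(train, val))  # (4, [5, 6, 7])
```

Keeping the weights from the best epoch and stopping once the validation loss has not improved for a few epochs is the common practice known as early stopping.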