Base Learning Rate

The learning rate defines how large the steps of your optimizer are on your loss landscape. The base learning rate is the value your optimizer starts from, before methods such as momentum or dampening are applied.

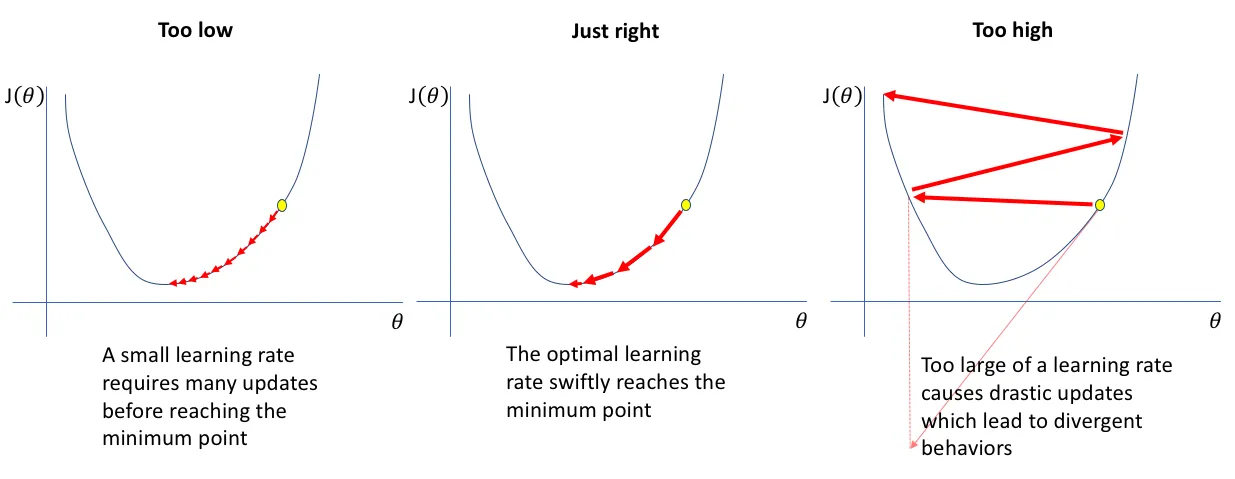

Choosing the right learning rate means balancing a trade-off: you want to reach the minimum quickly, but without taking steps so large that you overshoot it.
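To make this trade-off concrete, here is a minimal sketch that runs plain gradient descent on the toy loss f(w) = w**2, whose minimum is at w = 0. The function name `gradient_descent`, the starting point, the step count, and the three learning rates are illustrative assumptions, not values taken from any particular framework.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Run plain gradient descent on f(w) = w**2 and return the final w.

    Hypothetical helper for illustration only: no momentum, no dampening,
    and a constant learning rate.
    """
    w = w0
    for _ in range(steps):
        grad = 2.0 * w      # derivative of w**2 at the current w
        w = w - lr * grad   # step against the gradient, scaled by lr
    return w


# Compare a small, a moderate, and a too-large learning rate.
for lr in (0.01, 0.1, 1.1):
    final_w = gradient_descent(lr)
    print(f"lr={lr}: distance from minimum after 20 steps = {abs(final_w):.4f}")
```

On this toy problem the smallest rate moves toward the minimum only slowly, the moderate rate gets much closer in the same number of steps, and the largest rate overshoots on every step and ends up farther away than where it started.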

A learning rate of 0.1 means that, in each iteration, the weights in the network are updated by 10% of the estimated weight error, i.e. the gradient is multiplied by 0.1 before it is subtracted from the weights.
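As a rough illustration of a single update, the sketch below applies one plain gradient descent step to a short list of weights. The helper `sgd_step` and the example numbers are hypothetical, and the step assumes no momentum or dampening.

```python
def sgd_step(weights, grads, lr=0.1):
    """Return new weights after one plain gradient descent step.

    Hypothetical helper: each weight moves against its gradient by
    lr times that gradient.
    """
    return [w - lr * g for w, g in zip(weights, grads)]


weights = [0.5, -1.0]
grads = [2.0, -4.0]   # assumed gradients of the loss w.r.t. each weight

new_weights = sgd_step(weights, grads, lr=0.1)
print(new_weights)
```

With a learning rate of 0.1, the first weight moves by 0.1 * 2.0 = 0.2, from 0.5 to about 0.3, and the second moves by 0.1 * 4.0 = 0.4 in the opposite direction, from -1.0 to about -0.6.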