CosineAnnealingLR

If you have ever worked on a Computer Vision project, you probably know that a learning rate scheduler can significantly improve how well your model trains. On this page, we will:

- Cover the Cosine Annealing Learning Rate (CosineAnnealingLR) scheduler;

- Check out its parameters;

- See the potential effect of CosineAnnealingLR on a learning curve;

- And check out how to work with CosineAnnealingLR using Python and the PyTorch framework.

Let’s jump in.

CosineAnnealingLR explained

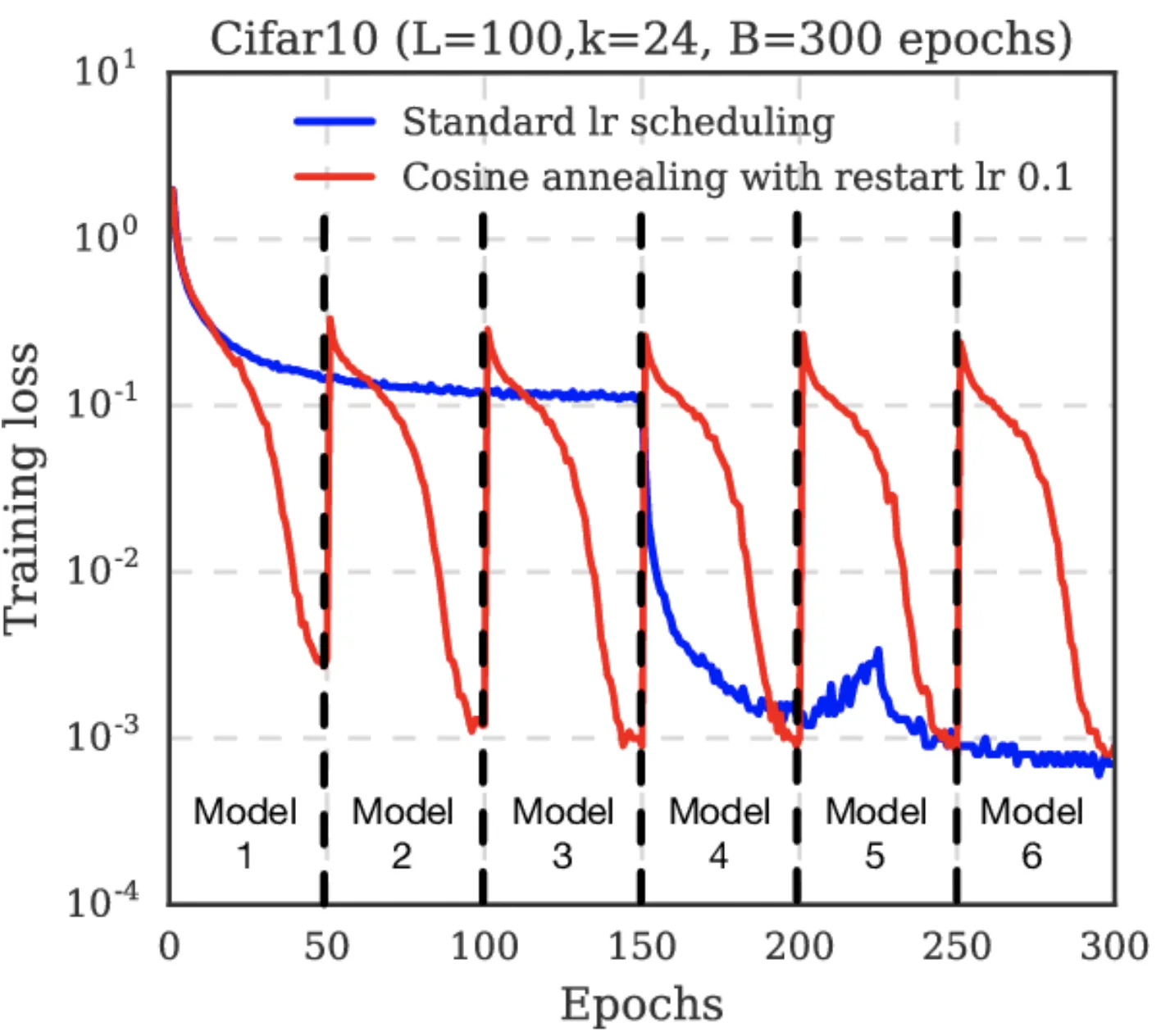

CosineAnnealingLR is a scheduling technique that starts with a very large learning rate and then aggressively decreases it to a value near 0 before increasing the learning rate again.

Each time a “restart” occurs, training resumes from the good weights found in the previous “cycle”. Thus, with each restart, the algorithm gets closer to the minimum of the loss.

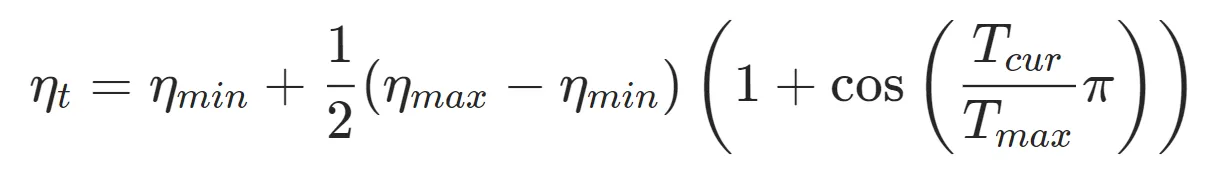

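Below is the formula for the learning rate at each step:

$$\eta_t = \eta_{min} + \frac{1}{2}\left(\eta_{max} - \eta_{min}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_{max}}\pi\right)\right)$$

In this formula: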

- η_min and η_max are the lower and upper bounds of the learning rate, with η_max set to the initial LR;

- T_cur is the number of epochs run since the last restart.
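
To make the rule concrete, here is a minimal sketch of the cosine annealing formula in plain Python. The function name `cosine_annealing_lr` and the example values (10 epochs, a learning rate going from 0.1 down to 0.001) are assumptions chosen for illustration, not part of any library.

```python
import math


def cosine_annealing_lr(t_cur, t_max, eta_max, eta_min=0.0):
    """Learning rate after t_cur epochs of a cycle that lasts t_max epochs.

    Hypothetical helper for illustration: eta_max is the initial LR,
    eta_min is the lowest LR reached at the end of the cycle.
    """
    cosine = math.cos(math.pi * t_cur / t_max)
    return eta_min + 0.5 * (eta_max - eta_min) * (1.0 + cosine)


# Assumed example values: one cycle of 10 epochs, LR from 0.1 down to 0.001.
schedule = [
    cosine_annealing_lr(t, t_max=10, eta_max=0.1, eta_min=0.001)
    for t in range(11)
]

for epoch, lr in enumerate(schedule):
    print(f"epoch {epoch:2d}: lr = {lr:.5f}")
```

The schedule starts at η_max when T_cur is 0, passes through the midpoint between η_max and η_min halfway through the cycle, and reaches η_min when T_cur equals T_max.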

Parameters

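- T_max - the maximum number of iterations, that is, the number of scheduler steps over which the learning rate decays from its initial value to eta_min;

- eta_min - the minimum learning rate achievable (0 by default).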
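
As a rough sketch of how these two parameters are passed in PyTorch, the snippet below attaches the scheduler to an optimizer and records the learning rate over one cycle. The tiny linear model, the SGD optimizer, and the values 0.1, 10, and 0.001 are assumptions for illustration only; a real training loop would compute a loss and call `backward()` before `optimizer.step()`.

```python
import torch
from torch.optim.lr_scheduler import CosineAnnealingLR

# Placeholder model and optimizer (assumed for illustration).
model = torch.nn.Linear(4, 2)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # initial LR acts as the maximum

# Decay from the initial LR down to eta_min over T_max scheduler steps.
scheduler = CosineAnnealingLR(optimizer, T_max=10, eta_min=0.001)

lrs = []
for epoch in range(10):
    lrs.append(scheduler.get_last_lr()[0])  # LR used during this epoch
    optimizer.step()   # the actual training step would go here
    scheduler.step()   # advance the schedule once per epoch
lrs.append(scheduler.get_last_lr()[0])      # LR after T_max steps

print(lrs)
```

Here the scheduler is stepped once per epoch, so T_max is counted in epochs. Stepping it once per batch instead would mean T_max has to be given in batches.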

CosineAnnealingLR visualized