Dataset Split in Machine Learning

When building a Machine Learning solution, you will inevitably face the training stage of the ML pipeline. As the name suggests, it is precisely the time for your model to learn based on the proposed data. However, boldly feeding the data to the model is a mistake you should avoid. Accurate training and validation are possible only if you intelligently split the initial dataset. Such techniques are known as Data Splitting.

On this page, we will:

Define the Data Splitting term;

Understand what training, validation, and test sets are;

Check out the standard ratios used when splitting the data;

Understand why Data Scientists split the data before training;

Review the advanced data splitting techniques;

Show how to utilize all the subsets properly during the training process.

Let’s jump in.

How to split a dataset?

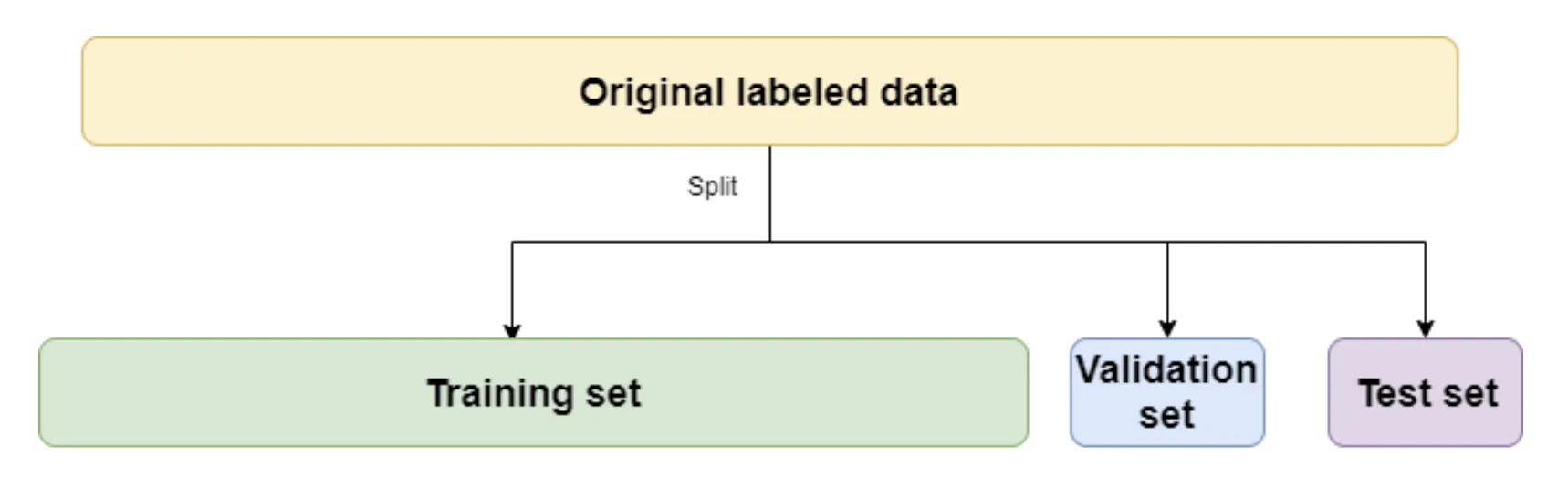

In Machine Learning, the Data Splitting term refers to several techniques Data Scientists apply to the data before the training stage to split the dataset into two or more subsets.

These subsets are used at different stages of the training process to train, validate, and test the model.

Let’s check out the difference between these sets.

Source

What is a training set in Machine Learning?

The training set is the subset of the original dataset used to train the model, i.e., extract valuable features from the data and optimize the model’s parameters to solve the task.

The training set should be the largest and most diverse subset, as its quality significantly impacts the end result.

What is a validation set in Machine Learning?

The validation set is the subset of the original dataset used to evaluate the model’s performance on training. Data Scientists view it as an indicator that the training process goes as intended and use the validation results to tune the model’s hyperparameters if needed.

It is vital to remember that the validation set should be distinct from the training set, i.e., the validation samples must not be in the training set and vice versa. If such a scenario occurs, the validation results will be inaccurate, potentially leading to improper decisions and analysis.

What is a test set in Machine Learning?

The test set is the subset of the original dataset used for the final assessment of the model’s performance. In other words, the test set is applied to the model after the training process is over to confirm that the model is trained.

It is vital to remember that the test set should be distinct from the training and validation sets, i.e., the test samples must not be in the training or validation sets and vice versa. If such a scenario occurs, the test results will be inaccurate, potentially leading to improper decisions and analysis.

Common train, test, and validation ratios in dataset split

The golden standard is splitting the dataset into training, validation, and test sets.

The most common ratios of these sets you can come across in research papers and real-life projects are:

80% training set, 10% validation set, 10% test set (80/10/10) - you want better training for your model;

70% training set, 15% validation set, 15% test set (70/15/15) - you want better evaluation of your model.

Why should you split a dataset?

Training a Machine Learning model is impossible without data splitting because you need to validate your model on unseen data, which can be easily obtained only if you split the original dataset into several subsets.

Therefore, to be precise and accurate, you must split the data at least once. Adding another split to create three subsets is something Data Scientists came up with over experimentation in searching for the best practices.

Advanced dataset split techniques

Technically, you can do data splitting manually and say that the first 700 data assets are the training set, the next 150 are the validation set, and the final 150 are the test set. However, opting for such an approach might be a mistake as it does not guarantee you building diverse subsets (for example, if your dataset is sorted). Therefore, Data Scientists developed some improvements.

Random dataset split

Random sampling is the most straightforward split strategy that is effective in most cases.

The idea is to randomly assign each data asset to one of the subsets according to their desired sizes. Such a split is performed without replacement, i.e., training, validation, and test sets do not overlap to prevent the data leak.

Data Stratification

Stratification addresses the class imbalance problem and might help achieve more reliable performance if the dataset has just a few samples of particular annotation.

In detail, stratified split ensures that each subset preserves the proportion of each label from the original dataset. For example, if the original data had 20 cats and 80 dogs, the training set would have 5 cats and 20 dogs, keeping the 1 to 4 ratio in place.

Stratification is the correct choice when the data is skewed regarding the labels.

How to train a model on a train, validation, and test sets?

Getting the subsets is just the first step of the training process. The general algorithm you should stick to utilize the subsets properly is as follows:

Feed the training set to your model;

Validate the model’s performance on the validation set after every training epoch;

Analyze the results obtained on validation and tune the model’s parameters based on the analysis (if needed). Technically, such an action means that you create a new, untrained version X of your model;

Proceed with steps 1 - 3 until you are satisfied with the model’s performance on the validation set;

Analyze all the versions of the model and pick the one that performs the best on the validation set;

Run the final evaluation round and confirm the obtained results on the test set.

Dataset split: Key Takeaways

Data Splitting combines several techniques Data Scientists apply to the data before the training stage to split the dataset into two or more subsets.

The most common approach is splitting the data into three subsets – training, validation, and test.

The training set is the subset of the original dataset used to train the model;

The validation set is the subset of the original dataset used to evaluate the model’s performance during training;

The test set is the subset of the original dataset used for the final assessment of the model’s performance.

The most common train/validation/test split ratio is 80/10/10.

You should take a closer look at random and stratification data splitting techniques for intelligent splitting.